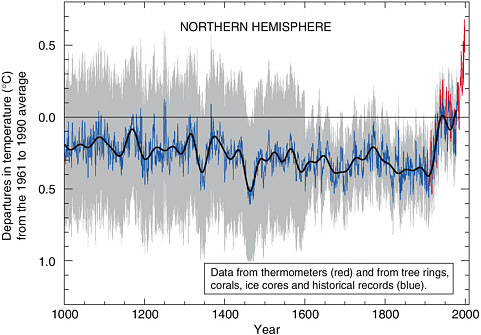

When measuring climate, this rule seems to be ignored. The precision of modern thermometers is between 0.2 and 0.6 of a degree Celsius. But temperature records go back to times before that precision was available. How accurate were the thermometers before our current digital ones? Were they placed widely enough across the globe to give a real idea of global climate? Probably not, especially if we go back before the 1900s. (Are they even now? Most are placed in North America and Western Europe; far fewer are in Asia, Australia and South America.) The graph below from the UN's IPCC (Intergovernmental Panel on Climate Change) shows direct temperature records going back to the 1850s and implies they are accurate to within less than a tenth of a degree, which is better than today!

As we go back to times before anyone had a thermometer placed at a station, scientists use "proxy data" such as tree rings, marine sediment cores, or ice cores to estimate the temperature. These are not direct measurements but indirect ones based on assumptions. How accurate are those indirect measurements? Frankly, we really don't know. We can't go back thousands of years and make a real comparison. It is an assumption, probably based on current models. But are they accurate within a degree? Less than a degree?

Northern Hemisphere Temperature Reconstructions by the UN's IPCC:

To be clear, I am not denying that there seems to be a slight temperature increase (about half a degree), which is less than the change you feel from a slight breeze. What I dispute is the claim that the increase has never been faster. Even accepting the graph above at face value, there are other periods where the temperature changed quickly. And again, there is an assumed precision here that cannot be proven.

The false assumption of precision is accepted by the media. When one article declares, "Global temperature rise is fastest in at least 11,000 years, study says," it is obvious that someone is engaging in sensationalism. This particular article admits that the records are "reconstructed temperatures records" and that "natural variability over the study's time span accounts for roughly 1 degree C." So the assumption is that "reconstructed" temperatures are accurate within one degree and that this one degree is "natural variability."

Later, the article says:

> According to the reconstruction, global average temperatures increased by about 0.6 degrees Celsius (1 degree Fahrenheit) from 11,300 to 9,500 years ago. Temperatures remained relatively constant for about 4,000 years. From about 4,500 years ago to roughly 100 years ago, global average temperatures cooled by 0.7 degrees C.

So reconstructed data from tree rings, ice cores and other indirect proxies is accurate to within a tenth of a degree Celsius?! That's again better than our actual direct measurements today! Remarkable.

In addition to the accuracy of the temperatures, they are also using proxy data to determine dates. Today we know what time of day each measurement is taken and can compute a yearly average. Can we be accurate enough to determine what year a layer of marine sediment or an ice core represents? It is bad science to suggest we can go from direct temperature readings to indirect temperature reconstructions and claim we have a continuous, accurate record showing it's getting warmer faster than ever.

Which side is the one that is ignoring science?

Related articles:

- How to make good scientific measurements (and how not to)

- Surface Temperature Reconstructions for the Last 2,000 Years (2006)

- Loehe Vindication
